Read File in PowerShell: Your Ultimate Guide

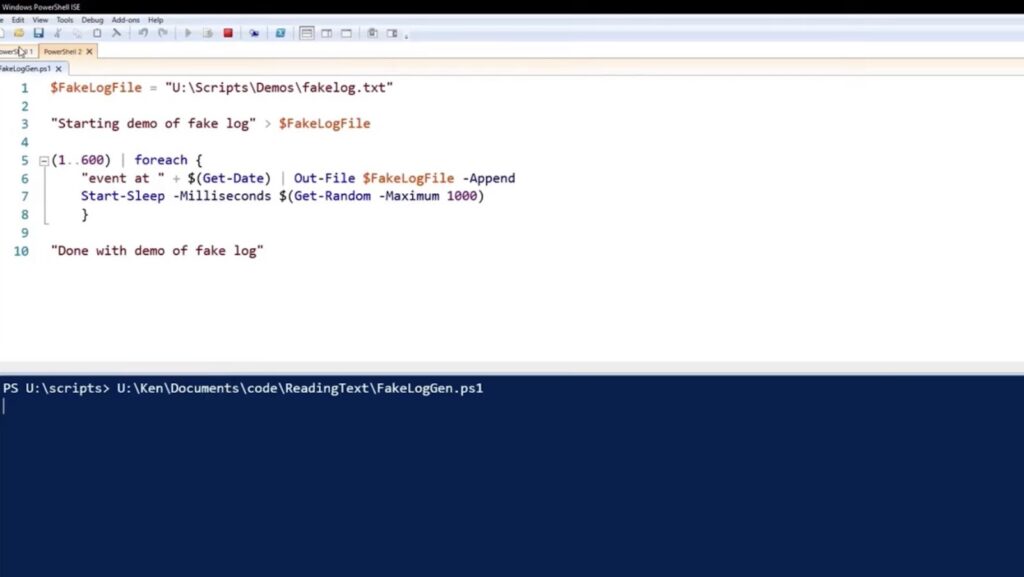

PowerShell users frequently need to read text files. Whether automating tasks, scanning log files, or pulling values out of configuration data, reading text files is a fundamental task. Among PowerShell’s capabilities, the Get-Content command stands out as a versatile tool for text file reading. This guide walks through the main ways of reading text files within this scripting language.

Introduction to PowerShell’s Get-Content Command

Within the vast landscape of PowerShell, a command-line shell and scripting language, an array of cmdlets and methods stand ready to read and manipulate text files. Be it log file processing, text data analysis, or extracting data from configuration files, PowerShell covers it all. Harnessing the innate strength of its built-in cmdlets and scripting prowess, efficient text file reading and parsing become second nature. The Get-Content command, a cmdlet in PowerShell, grants the power to read a file’s content, either storing it in a variable or displaying it in the console.

Understanding the Basics of Reading Text Files in PowerShell

Before plunging into the specifics of the Get-Content command, one must grasp the fundamentals of text file reading in PowerShell. PowerShell’s versatility extends beyond text files to encompass a wide array of file formats, including XML, CSV, and JSON. This breadth of compatibility ensures that PowerShell remains a go-to solution for handling various data types commonly encountered in the digital landscape.

However, to navigate this landscape effectively, understanding how to read a text file serves as a foundational skill. At the core of text file reading is the need to specify the file’s path accurately. PowerShell offers two approaches for this: the absolute path and the relative path:

- An absolute path provides the full location of the file, starting from the root of the drive. This method ensures unambiguous file identification, regardless of the current working directory. For instance, “C:\Data\file.txt” is an absolute path that points to a file named “file.txt” located in the “Data” folder on the C: drive;

- Conversely, a relative path specifies the file’s location relative to the current working directory. It offers a more concise way to reference files. For instance, if the current location is the “Scripts” folder, “file.txt” refers to a file in that folder. The working directory is not necessarily the folder that contains the script; inside a script, the automatic variable $PSScriptRoot holds the script’s own directory.

These foundational principles pave the way for more advanced text file reading techniques within PowerShell, ensuring precision and efficiency in file handling tasks.
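The difference between the two path styles can be sketched as follows. The folder name PathDemo and the file contents are hypothetical, and the sample file is created in the temp folder only so that the sketch is self-contained.

```powershell
# Hypothetical sample folder and file, created so the sketch can run anywhere.
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'PathDemo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null
Set-Content -Path (Join-Path $dir 'file.txt') -Value 'hello'

# Absolute path: resolves the same way from any working directory.
$absolute = Get-Content -Path (Join-Path $dir 'file.txt')

# Relative path: resolved against the current location,
# so the sketch changes into the folder first and restores the location afterwards.
Push-Location $dir
$relative = Get-Content -Path 'file.txt'
Pop-Location
```

Both variables end up holding the same line; only the way the file is located differs.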

Reading a Text File Using Get-Content Command

The Get-Content command serves as the primary conduit for text file reading in PowerShell. Incorporating the Get-Content command into PowerShell scripts involves specifying the file path and assigning the output to a variable or displaying it in the console.

```powershell
Get-Content -Path "C:\Logs\AppLog.txt"
```

This command retrieves content from the “AppLog.txt” file in the “C:\Logs” directory via the -Path parameter, returning each line as a string object. Similarly, content from multiple files can be obtained using the asterisk (*) character and the -Filter parameter. Output assignment to a variable is also possible.
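Reading several files at once might look like the sketch below. The folder LogsDemo and its three files are hypothetical and are created only to keep the example self-contained.

```powershell
# Hypothetical sample folder with two .log files and one .txt file.
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'LogsDemo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null
Set-Content -Path (Join-Path $dir 'a.log') -Value 'from a'
Set-Content -Path (Join-Path $dir 'b.log') -Value 'from b'
Set-Content -Path (Join-Path $dir 'notes.txt') -Value 'ignored'

# The asterisk in -Path selects the items in the folder;
# -Filter narrows the selection to files that match *.log.
$lines = Get-Content -Path (Join-Path $dir '*') -Filter '*.log'
```

The lines of all matching files are returned as one combined array, so the result does not show which file a line came from.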

Reading a Text File into a Variable Using Get-Content Command

The Get-Content command not only showcases content in the console but also enables storage of content in a variable. This storage empowers data manipulation and diverse operations.

```powershell
$content = Get-Content -Path C:\Logs\log.txt
```

The -Raw parameter retrieves the entire file content as a single string instead of an array of strings.
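A short sketch of the difference between the default behaviour and -Raw, using a hypothetical three-line sample file written to the temp folder:

```powershell
# Hypothetical sample file with three lines.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'raw-demo.txt'
Set-Content -Path $path -Value 'first', 'second', 'third'

$lines = Get-Content -Path $path        # array with one string per line
$text  = Get-Content -Path $path -Raw   # one string, line breaks included

$lines.Count            # 3
$text.GetType().Name    # String
```

The array form suits per-line work, while the single string is convenient for multi-line pattern matching or for passing the whole text to another command.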

Reading a Text File Line by Line in PowerShell using Get-Content

Occasionally, reading a text file line by line becomes necessary, especially with large files where processing one line at a time is preferred. PowerShell’s Get-Content cmdlet comes to the rescue, allowing retrieval of file content and subsequent line-by-line processing.
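Line-by-line processing might be sketched like this. The sample file and the numbering format are illustrative assumptions, not part of any particular log layout.

```powershell
# Hypothetical sample file.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'lines-demo.txt'
Set-Content -Path $path -Value 'alpha', 'beta', 'gamma'

# Get-Content emits one line at a time into the pipeline, so each line
# can be handled without first collecting the whole file in a variable.
$lineNumber = 0
$numbered = Get-Content -Path $path | ForEach-Object {
    $lineNumber++
    '{0}: {1}' -f $lineNumber, $_
}
```

Here $numbered holds each line prefixed with its position in the file.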

Search and Filter File Contents

PowerShell also accommodates the filtering of file contents, such as log entries, based on specific criteria. This is achieved by combining the Get-Content cmdlet with other cmdlets like Where-Object or Select-String. An example demonstrates filtering based on a keyword or a regular expression pattern.

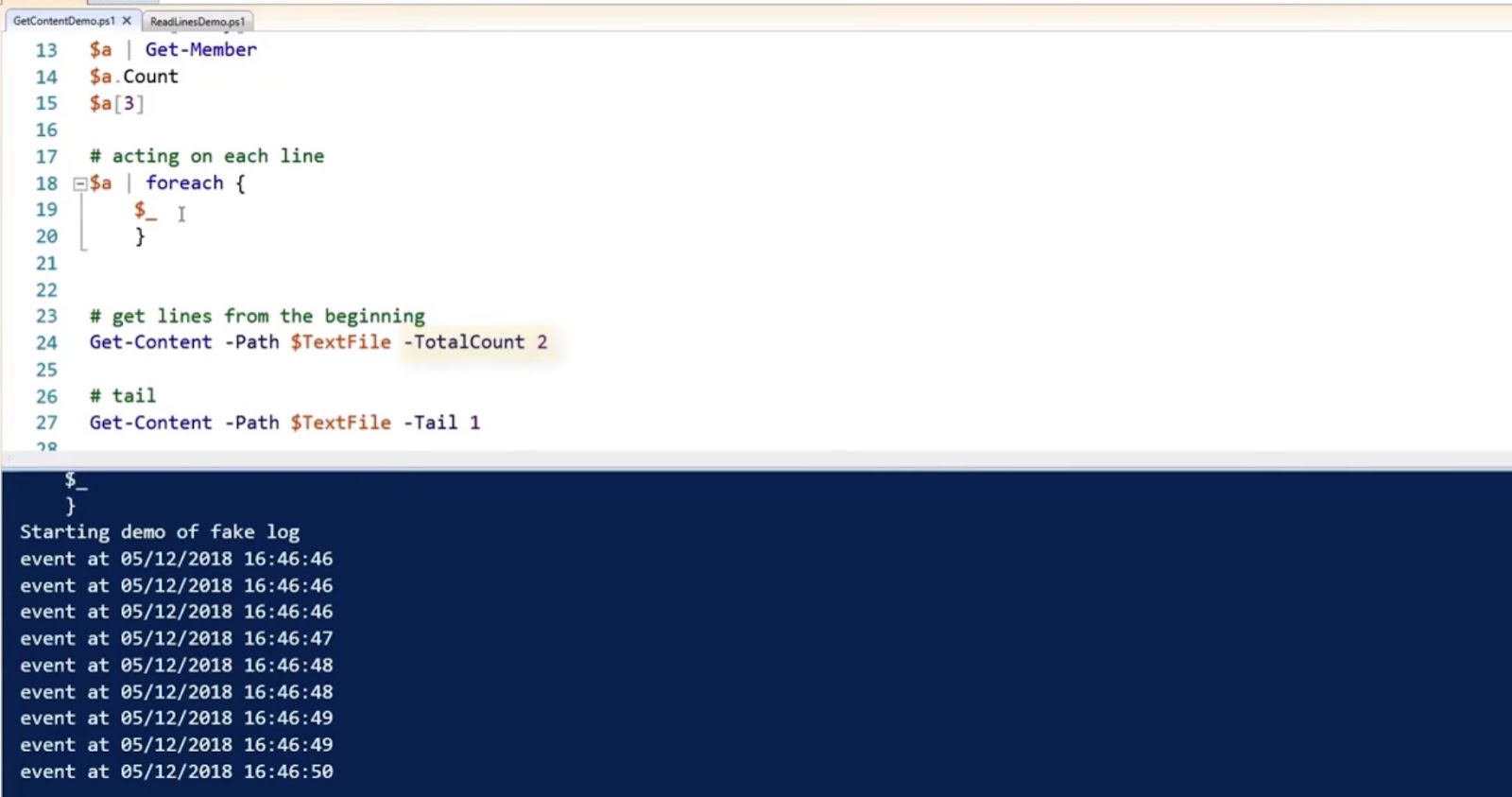
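In text form, both kinds of filter could be sketched as follows. The log lines are made-up sample data, and the patterns are only illustrations.

```powershell
# Made-up log entries written to a hypothetical sample file.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'filter-demo.log'
Set-Content -Path $path -Value @(
    '2024-01-01 INFO  Service started'
    '2024-01-01 ERROR Disk full'
    '2024-01-02 WARN  Retry 3'
    '2024-01-02 ERROR Timeout after 30s'
)

# Keyword filter: keep only the lines that contain ERROR.
$errors = Get-Content -Path $path | Where-Object { $_ -match 'ERROR' }

# Regular-expression filter: Select-String returns match objects,
# and the Line property of each one holds the original text.
$withNumbers = Get-Content -Path $path |
    Select-String -Pattern 'Retry \d+|after \d+s' |
    ForEach-Object { $_.Line }
```

Where-Object keeps plain strings, whereas Select-String also records details such as the line number of each match.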

Get the First or Last “N” Lines of a Text file

To obtain specific portions of a text file, PowerShell offers parameters like -TotalCount for fetching the first few lines and -Tail for obtaining the last “N” lines. When dealing with large text files, precision in extracting relevant content can significantly improve efficiency. Utilizing the -TotalCount parameter allows PowerShell users to cherry-pick the initial lines they require, providing a tailored view of the file’s beginning. Conversely, the -Tail parameter offers the power to access the concluding lines of the file, ideal for quickly reviewing a text file’s most recent entries.
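Both parameters can be tried on a small sample. The ten-line file below is hypothetical and stands in for a much larger log.

```powershell
# Hypothetical sample file with ten numbered entries.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'headtail-demo.txt'
Set-Content -Path $path -Value (1..10 | ForEach-Object { "entry $_" })

$first = Get-Content -Path $path -TotalCount 3   # first three lines
$last  = Get-Content -Path $path -Tail 2         # last two lines
```

With this sample, $first holds entries 1 to 3 and $last holds entries 9 and 10.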

Reading Specific Lines of a Text File

For extracting particular lines from a text file, PowerShell provides methods like Select-Object with the -Index parameter. This capability proves invaluable when precision is essential. The index is zero-based, so -Index 0 returns the first line and -Index 4 the fifth, and several indexes can be passed at once. This feature finds practical applications in scenarios where only specific data points or records need attention, streamlining data extraction and analysis tasks.
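A minimal sketch of picking lines by position, again with a hypothetical sample file. Note that Select-Object counts from zero, so index 2 is the third line.

```powershell
# Hypothetical sample file with five lines.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'index-demo.txt'
Set-Content -Path $path -Value 'line A', 'line B', 'line C', 'line D', 'line E'

# -Index is zero-based: 2 selects the third line.
$third = Get-Content -Path $path | Select-Object -Index 2

# Several indexes can be requested in one call.
$firstAndFifth = Get-Content -Path $path | Select-Object -Index 0, 4
```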

Skipping Header and Footer Lines

When dealing with files containing header and footer lines to be skipped, PowerShell offers the -Skip parameter of the Select-Object cmdlet, which drops a given number of lines from the start of the input. For lines at the end, Select-Object provides the companion -SkipLast parameter. Whether dealing with files that contain metadata at the beginning or summaries at the end, these parameters streamline the process of accessing the core data within files.
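Trimming both ends of a file might look like the sketch below. It assumes PowerShell 5.0 or later for Select-Object -SkipLast, and the header and footer lines are invented sample data.

```powershell
# Hypothetical sample file with one header line and one footer line.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'skip-demo.txt'
Set-Content -Path $path -Value '# header', 'row 1', 'row 2', 'row 3', '# footer'

# -Skip drops lines from the start; -SkipLast drops lines from the end.
# The two parameters belong to different parameter sets,
# so they are applied in two separate Select-Object calls.
$body = Get-Content -Path $path |
    Select-Object -Skip 1 |
    Select-Object -SkipLast 1
```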

Reading the Content of a File Using Streamreader in PowerShell

The .NET class System.IO.StreamReader offers another way to read file content in PowerShell. It suits text files of any size, from small configuration files to massive logs. Rather than loading the whole file, StreamReader reads through an internal buffer and hands back one line or one block of characters per call, sparing memory while providing a consistent stream of information. The trade-off is a little more code, because the script creates the reader itself and must close it when finished.
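A minimal sketch of reading with StreamReader, assuming PowerShell 5.0 or later for the ::new() syntax. The sample file is hypothetical, and the loop simply counts lines to stand in for real processing.

```powershell
# Hypothetical sample file.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'reader-demo.txt'
Set-Content -Path $path -Value 'one', 'two', 'three'

# .NET resolves relative paths against the process directory,
# so a full path is passed to the reader.
$reader = [System.IO.StreamReader]::new($path)
try {
    $count = 0
    # ReadLine returns $null once the end of the file is reached.
    while ($null -ne ($line = $reader.ReadLine())) {
        $count++
    }
}
finally {
    $reader.Dispose()   # release the file handle even if reading fails
}
```

Only the current line is held in $line at any time, which is what keeps memory use low.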

Reading Large Text Files in PowerShell

Dealing with large text files necessitates efficient reading techniques in PowerShell. The performance and memory impact of reading large text files should not be underestimated, and PowerShell provides several methods to address these challenges effectively.

Using the -ReadCount Parameter

The -ReadCount parameter of the Get-Content cmdlet controls how many lines are sent through the pipeline at a time. The default is 1, so each line travels individually; a larger value sends arrays of that many lines, which reduces per-line pipeline overhead on substantial files, while a value of 0 sends the entire content as a single array. Choosing a batch size lets users strike a balance between processing speed and memory usage, since the downstream command only needs to hold one batch at a time.
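The batching behaviour can be observed on a small sample. The file and the batch size of 4 are arbitrary choices for illustration.

```powershell
# Hypothetical sample file with ten lines.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'readcount-demo.txt'
Set-Content -Path $path -Value (1..10 | ForEach-Object { "line $_" })

# With -ReadCount 4, each pipeline object is an array of up to four lines,
# so the script block runs once per batch instead of once per line.
$batchSizes = Get-Content -Path $path -ReadCount 4 |
    ForEach-Object { $_.Count }
```

For this ten-line file the batches contain 4, 4, and 2 lines. Code inside the script block has to loop over $_ to reach the individual lines.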

Reading Chunk by Chunk using StreamReader

Another approach for handling large text files efficiently involves reading the file in chunks using the StreamReader class. When a file is enormous, or has no convenient line breaks, reading a fixed-size block of characters at a time keeps memory use bounded. Each chunk represents a portion of the file, and only that portion is held in memory while it is processed, enabling the efficient processing of even the largest files.
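Chunked reading could be sketched as below, assuming PowerShell 5.0 or later. The 4096-character buffer is an arbitrary size chosen for the example, and the loop only totals the characters to stand in for real processing.

```powershell
# Hypothetical sample file: 10,000 characters without any line breaks.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'chunk-demo.txt'
Set-Content -Path $path -Value ('x' * 10000) -NoNewline

$reader = [System.IO.StreamReader]::new($path)
$buffer = [char[]]::new(4096)   # chunk size is an assumption, tune as needed
$total  = 0
try {
    # Read fills the buffer and returns how many characters it stored;
    # 0 means the end of the file has been reached.
    while (($read = $reader.Read($buffer, 0, $buffer.Length)) -gt 0) {
        # Only the first $read characters of $buffer belong to this chunk.
        $total += $read
    }
}
finally {
    $reader.Dispose()
}
```

A chunk can end in the middle of a word or line, so code that needs whole records has to carry the unfinished tail over to the next iteration.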

Read CSV, JSON, and XML Files in PowerShell

PowerShell excels at handling structured data in formats like CSV, JSON, and XML. Whether dealing with spreadsheets, data interchange formats, or structured documents, PowerShell provides specialized cmdlets and methods for efficient data extraction and manipulation.

Get Content from CSV files

For structured data in CSV format, PowerShell offers the ConvertFrom-Csv cmdlet, which converts CSV text, for example the output of Get-Content, into PowerShell objects whose properties are named after the header row. The cmdlet assumes a comma as the separator and does not detect delimiters automatically; other separators are specified with the -Delimiter parameter. The related Import-Csv cmdlet reads a CSV file directly. Whether you’re processing financial data or managing inventory records, these cmdlets simplify the handling of tabular data.
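A small sketch of the conversion, using invented names and ages as sample data:

```powershell
# Hypothetical CSV file with a header row and two records.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'people-demo.csv'
Set-Content -Path $path -Value 'Name,Age', 'Ada,36', 'Linus,28'

# Each data row becomes an object with Name and Age properties.
$people = Get-Content -Path $path | ConvertFrom-Csv

$people[0].Name   # Ada
```

All property values arrive as strings, so a cast such as [int]$people[0].Age is needed before doing arithmetic. For separators other than a comma, the -Delimiter parameter names the character to use.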

Reading a JSON file

To read JSON files, Get-Content and ConvertFrom-Json cmdlets convert JSON data into PowerShell objects. JSON, a popular data interchange format, finds extensive use in web services and configuration files. PowerShell seamlessly integrates JSON handling through the Get-Content and ConvertFrom-Json cmdlets. By retrieving JSON content with Get-Content and converting it to PowerShell objects with ConvertFrom-Json, PowerShell users can effortlessly work with structured data from JSON files.
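The two-step pattern might look like this. The settings file and its keys are hypothetical, and -Raw is used so that the whole document reaches ConvertFrom-Json as one string.

```powershell
# Hypothetical JSON settings file.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'settings-demo.json'
Set-Content -Path $path -Value '{ "name": "demo", "retries": 3, "tags": ["a", "b"] }'

# Read the file as a single string, then convert it to an object.
$settings = Get-Content -Path $path -Raw | ConvertFrom-Json

$settings.name      # demo
$settings.retries   # 3
```

JSON keys become properties and JSON arrays become PowerShell arrays, so $settings.tags can be indexed or piped like any other collection.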

Reading an XML file

For XML files, Get-Content combined with the [xml] type accelerator transforms XML content into PowerShell objects. XML, a versatile markup language, often stores structured data. Casting the text returned by Get-Content to [xml] produces a document object whose elements and attributes can be reached with ordinary property syntax, facilitating easy manipulation and interpretation of XML data. Whether parsing configuration files or processing data feeds, PowerShell simplifies XML handling.
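A minimal sketch using the [xml] type accelerator. The element and attribute names in the sample document are invented for illustration.

```powershell
# Hypothetical XML configuration file.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'config-demo.xml'
Set-Content -Path $path -Value '<config><server host="web01" port="8080" /></config>'

# Casting the file text to [xml] parses it into an XML document object.
[xml]$doc = Get-Content -Path $path -Raw

# Elements and attributes are reachable with property syntax.
$serverHost = $doc.config.server.host
$serverPort = $doc.config.server.port
```

Attribute values are returned as strings, so the port would need a cast before being used as a number.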

Common Errors While Using the Get-Content cmdlet in PowerShell

Several common errors might crop up while using the Get-Content command, each with its own remedy. Troubleshooting issues is an integral part of working with PowerShell’s file reading capabilities. An error such as “Cannot find path ... because it does not exist” usually arises from an incorrect path and can be resolved by verifying the file location. Errors related to files being in use by other processes can be addressed by closing the conflicting programs. Additionally, processing large files through the pipeline, rather than loading them whole into a variable, helps prevent memory-related errors.
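Handling a missing file could be sketched as follows. The file name is generated so that it does not exist, and the wording of the message is a hypothetical choice.

```powershell
# A path that should not exist, built from a fresh GUID.
$missing = Join-Path ([System.IO.Path]::GetTempPath()) ([guid]::NewGuid().ToString() + '.txt')

$message = $null
try {
    # -ErrorAction Stop turns the normally non-terminating error
    # into a terminating one, so that the catch block can handle it.
    $content = Get-Content -Path $missing -ErrorAction Stop
}
catch {
    # $_ is the error record; its Exception.Message explains what went wrong.
    $message = "Could not read file: $($_.Exception.Message)"
}
```

Without -ErrorAction Stop, Get-Content would report the problem and the script would carry on, leaving the catch block unused.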

Best Practices for Using Get-Content Command in PowerShell

Adhering to best practices enhances the efficacy of using the Get-Content command in PowerShell. Specifying file paths explicitly, either as absolute paths or as relative paths whose base directory is known, promotes clarity and consistency. Tuning the -ReadCount parameter helps when dealing with large files. Storing content in variables facilitates data manipulation, while robust error handling techniques like try-catch blocks ensure smooth execution. Leveraging the ConvertFrom-Csv and ConvertFrom-Json cmdlets for specific data formats further streamlines the process.

Conclusion

The realm of reading text files in PowerShell is a fundamental task in data processing and automation. Text files are ubiquitous in the computing world, serving as repositories for logs, configuration settings, data exports, and more. Harnessing PowerShell’s capabilities for text file manipulation empowers users to extract valuable insights, automate routine tasks, and streamline data-driven operations.

- The trio of tools – Get-Content, StreamReader, and the .NET method [System.IO.File]::ReadAllText – offers versatile solutions for text file reading in different scenarios. Get-Content excels in simplicity and ease of use, making it an ideal choice for quick inspections or when reading smaller files. StreamReader, on the other hand, shines when dealing with large or complex text files, ensuring efficient memory usage. Meanwhile, ReadAllText reads an entire file into a single string, much like Get-Content with -Raw, and suits cases where a holistic view of the text is required;

- Whether processing line by line, chunk by chunk, or as a whole, PowerShell caters to diverse reading needs. It provides the means to navigate through text files efficiently, whether extracting specific lines, filtering content, or handling structured data in formats like CSV, JSON, or XML;

- Furthermore, PowerShell’s robust error handling mechanisms, exemplified by try-catch blocks, ensure scripts can gracefully handle unexpected situations, such as missing files or access errors. This robustness enhances the reliability of automation workflows.

Armed with the examples presented in this guide, one is equipped to tackle text file reading within PowerShell scripts and automation workflows. From the basics of file path specification to advanced techniques for large files and structured data, PowerShell empowers users to harness the full potential of text files in their computing endeavors. Whether you’re a sysadmin automating system log analysis or a data analyst parsing data exports, PowerShell’s text file reading capabilities are a powerful ally in your toolkit.

FAQ

PowerShell provides methods like Select-Object with the -Index parameter for extracting particular lines from a text file.

You can skip header and footer lines using the -Skip parameter in combination with the Select-Object cmdlet.

The StreamReader class offers efficient file content reading in PowerShell, especially for large text files.

PowerShell provides techniques like using the -ReadCount parameter or reading files in chunks with StreamReader for efficient handling of large text files.

Yes, PowerShell offers specialized cmdlets for reading and manipulating structured data formats like CSV, JSON, and XML.

Common errors include path-related issues and memory-related errors, which can be resolved by verifying file locations and using memory-efficient techniques.

Best practices include specifying clear file paths, optimizing memory usage with the -ReadCount parameter, error handling with try-catch blocks, and using ConvertFrom- cmdlets for specific data formats.

PowerShell’s text file reading capabilities empower users to automate tasks, extract insights, and handle data-driven operations efficiently.