Unleash the Power of Web Requests with Invoke-WebRequest

In the ever-evolving landscape of web development and automation, the ability to seamlessly interact with online resources is paramount. Whether you’re a seasoned developer, a sysadmin looking to automate tasks, or simply a curious tech enthusiast, understanding how to harness the potential of Invoke-WebRequest is a skill that can empower you to navigate the digital realm with finesse.

Invoke-WebRequest, often hailed as a hidden gem within the PowerShell arsenal, is a versatile cmdlet that opens a gateway to the World Wide Web from the comfort of your command line. This powerful tool equips you with the capability to retrieve web content, interact with REST APIs, scrape data from websites, and even perform web-based authentication, all within the familiar environment of PowerShell.

As we delve into this comprehensive guide, we will uncover the intricacies of Invoke-WebRequest, exploring its myriad applications, tips, and tricks. Whether you’re seeking to automate repetitive web-related tasks, extract valuable data from online sources, or enhance your web development toolkit, this article will serve as your essential companion on your journey to mastering Invoke-WebRequest. So, fasten your seatbelts, as we embark on a fascinating journey through the digital realm.

Downloading a File: A Step-by-Step Guide for Tech Enthusiasts

So, you’re eager to download a file. In this example, we’ll grab the ElvUI addon for World of Warcraft. But here’s the twist: we’re going to do it with some PowerShell magic!

PowerShell Setup:

First things first, we need to set up PowerShell to work its wonders. We’ll fetch the download link using Invoke-WebRequest:

$downloadURL = 'http://www.tukui.org/dl.php'

$downloadRequest = Invoke-WebRequest -Uri $downloadURL

Now, let’s break down what’s happening here. We set the $downloadURL variable to hold the URL of the webpage we want to visit. Then, we use $downloadRequest to store the results of the Invoke-WebRequest cmdlet, fetching the page content from the given URL.

Exploring the Content:

Before we proceed further, let’s take a peek into what’s inside $downloadRequest. It’s like unwrapping a treasure chest! We’ll initially focus on the Links property, which conveniently holds all the links found on the website:

$downloadRequest.Links

This is a goldmine for us, as it makes parsing through links a breeze. But wait, there’s more to uncover!

Hunting for ElvUI:

Now, let’s embark on a quest to find the ElvUI download link hidden among the myriad of links. To do this, we’ll filter the links using Where-Object and look for those containing keywords like “Elv” and “Download”:

$elvLink = ($downloadRequest.Links | Where-Object {$_ -like '*elv*' -and $_ -like '*download*'}).href

Bingo! We’ve tracked down the elusive ElvUI download link and stored it in the $elvLink variable. Victory is within reach!
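To see the filter at work without touching the network, here is a minimal sketch that runs the same kind of Where-Object test against a few made-up link objects. The sample URLs are hypothetical stand-ins for $downloadRequest.Links, and the sketch matches on the href property explicitly rather than on the whole object, which is an assumption about what you want to match.

```powershell
# Hypothetical sample data standing in for $downloadRequest.Links
$sampleLinks = @(
    [pscustomobject]@{ innerText = 'Home';           href = 'https://example.com/' }
    [pscustomobject]@{ innerText = 'Download ElvUI'; href = 'https://example.com/downloads/elvui-1.0.zip' }
    [pscustomobject]@{ innerText = 'Download Tukui'; href = 'https://example.com/downloads/tukui-1.0.zip' }
)

# Keep only links whose href mentions both "elv" and "download" (-like is case-insensitive)
$elvLink = ($sampleLinks | Where-Object { $_.href -like '*elv*' -and $_.href -like '*download*' }).href

$elvLink
```

If more than one link matches, $elvLink becomes an array, so it may be worth checking the count before downloading.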

Two Ways to Download:

Now, the time has come to claim your prize. There are two methods at your disposal:

Method 1: Using the Content Property

In this approach, we’ll use Invoke-WebRequest to fetch the file’s content and then write all the bytes to a file. It goes like this:

$fileName = $elvLink.Substring($elvLink.LastIndexOf('/')+1)

$downloadRequest = Invoke-WebRequest -Uri $elvLink

$fileContents = $downloadRequest.Content

The code above extracts the file name from the link and stores the content in the $fileContents variable. But we’re not done yet.

To complete the mission, we need to write those precious bytes to a file using [io.file]::WriteAllBytes:

[io.file]::WriteAllBytes("C:\download\$fileName",$fileContents)

Method 2: Using -OutFile Parameter

Alternatively, you can opt for a more streamlined approach by using the -OutFile parameter with Invoke-WebRequest. Here’s how:

$fileName = $elvLink.Substring($elvLink.LastIndexOf('/')+1)

$downloadRequest = Invoke-WebRequest -Uri $elvLink -OutFile "C:\download\$fileName" -PassThru

Don’t forget to add -PassThru if you want to retain the request results in the $downloadRequest variable.
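Both methods derive $fileName with Substring and LastIndexOf. The sketch below shows what that produces for a hypothetical link that carries a query string, next to an alternative that parses the link as a [uri] first. The URL and the temp-folder destination are assumptions made purely for illustration.

```powershell
# Hypothetical download link, including a query string
$elvLink = 'https://example.com/downloads/elvui-1.0.zip?source=site'

# Approach from the article: everything after the last slash (the query string comes along)
$rawName = $elvLink.Substring($elvLink.LastIndexOf('/') + 1)

# Alternative: let [uri] separate the path from the query, then take the file name
$fileName = [System.IO.Path]::GetFileName(([uri]$elvLink).AbsolutePath)

# Build a destination path (the temp folder is used here only to keep the sketch portable)
$destination = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath $fileName

$rawName    # elvui-1.0.zip?source=site
$fileName   # elvui-1.0.zip
```

For links without a query string, both approaches give the same result.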

Success! You’ve Got the File:

Now, the moment of truth! Run the code, and voilà! You should now find the downloaded file nestled comfortably in “C:\download”.

A Word of Validation:

The $downloadRequest variable holds the results of the request. You can use this to verify that everything went according to plan. Always a handy tool for a tech-savvy adventurer.

Exploring File Downloads with Redirects

Downloading files from websites that employ redirects can be a bit of a puzzle. Today, we’ll use the example of downloading WinPython to uncover the secrets behind dealing with these redirects. We’ll walk you through the process, step by step.

Understanding Invoke-WebRequest Parameters

Before we dive into the code, let’s dissect some key parameters of the Invoke-WebRequest cmdlet:

- -MaximumRedirection 0: This nifty parameter prevents automatic redirection. Setting it to zero allows us to manually manage redirection data;

- -ErrorAction SilentlyContinue: By using this, we’re telling PowerShell to overlook redirection errors. However, keep in mind that this might hide other potential errors. It’s the price we pay for keeping our data tidy in the $downloadRequest variable;

- -UserAgent ([Microsoft.PowerShell.Commands.PSUserAgent]::FireFox): Including this parameter sends along a user agent string for Firefox, making the download possible on certain websites where it otherwise might not work. The parentheses make PowerShell evaluate the expression instead of passing it along as literal text.
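One detail worth checking when the user agent is passed inline: in a command’s argument list, PowerShell treats a bare [Type]::Member token as plain text unless it is wrapped in parentheses. The sketch below demonstrates this with a small echo function; Show-UserAgentArgument is a hypothetical helper written just for this demonstration, not part of Invoke-WebRequest.

```powershell
# Hypothetical helper that simply returns whatever it receives
function Show-UserAgentArgument {
    param([string]$UserAgent)
    $UserAgent
}

# Without parentheses the argument arrives as the literal text
$literal = Show-UserAgentArgument -UserAgent [Microsoft.PowerShell.Commands.PSUserAgent]::FireFox

# With parentheses the static property is evaluated first
$expanded = Show-UserAgentArgument -UserAgent ([Microsoft.PowerShell.Commands.PSUserAgent]::FireFox)

$literal
$expanded
```

Storing the value in a variable first, for example $uaString, and passing that avoids the issue as well.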

The Initial Code

Here’s the initial setup:

$downloadURL = 'https://sourceforge.net/projects/winpython/files/latest/download?source=frontpage&position=4'

$downloadRequest = Invoke-WebRequest -Uri $downloadURL -MaximumRedirection 0 -UserAgent ([Microsoft.PowerShell.Commands.PSUserAgent]::FireFox) -ErrorAction SilentlyContinue

Now, let’s break down the code further:

1. Retrieving the Redirect Link

The status description should ideally be “Found.” This tells us that there’s a redirect link stored in the header information, accessible via $downloadRequest.Headers.Location. If it’s found, we proceed.
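As a rough sketch of this step, the snippet below reads the redirect target from a mock response object. The object and its URL are hypothetical stand-ins for the real $downloadRequest. Piping through Select-Object -First 1 is a defensive assumption, since header values may come back as collections in newer PowerShell versions.

```powershell
# Hypothetical stand-in for the response returned with -MaximumRedirection 0
$downloadRequest = [pscustomobject]@{
    StatusCode        = 302
    StatusDescription = 'Found'
    Headers           = @{ Location = 'https://downloads.example.com/WinPython-64bit-3.4.3.5.exe' }
}

$redirectLink = $null
if ($downloadRequest.StatusDescription -eq 'Found') {
    # Works whether Location is a single string or a collection of strings
    $redirectLink = $downloadRequest.Headers.Location | Select-Object -First 1
}

$redirectLink
```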

2. Extracting the File Name

We delve into the content property and extract the file name. The $fileName variable uses Select-String to locate a string that matches the pattern ‘WinPython-.+exe’. This gives us the file name we’re after.
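Here is a minimal sketch of that extraction, run against a made-up piece of response content. The sample text and URL are hypothetical; only the pattern comes from the description above.

```powershell
# Hypothetical response content containing the file name
$content = 'The document has moved <a href="https://downloads.example.com/project/WinPython-64bit-3.4.3.5.exe">here</a>.'

# Select-String returns match information; the matched text is in Matches[0].Value
$fileName = ($content | Select-String -Pattern 'WinPython-.+exe').Matches[0].Value

$fileName
```

Because .+ is greedy, the match runs to the last "exe" in the text. If the content could mention the file more than once, a lazy pattern such as 'WinPython-.+?exe' may be the safer choice.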

Adding Logic for Unexpected Responses

To handle unexpected responses, we’ve included a couple of Switch statements:

Switch ($downloadRequest.StatusDescription) {

'Found' {

# Code for handling redirection

}

Default {

# Code for handling unexpected status descriptions

}

}

Switch ($downloadRequest.BaseResponse.ContentType) {

'application/octet-stream' {

# Code for handling downloadable content

}

Default {

# Code for handling unexpected content types

}

}

Now, let’s run the full code, and we’ll check ‘C:\download’ to verify the results!

Tracking the Progress

While the download is in progress, a progress indicator will be displayed. Please note that sometimes it may not accurately represent the actual progress.

Upon completion, we’ve successfully downloaded all 277MB of WinPython and saved it to the appropriate location. You’ve successfully navigated the maze of redirects and emerged victorious!

Exploring Web Content with PowerShell’s Invoke-WebRequest

When working with web content in PowerShell, the Invoke-WebRequest cmdlet becomes your trusty sidekick. It allows you to fetch data from websites and interact with it programmatically. In this guide, we will dive into the intricacies of parsing web content using Invoke-WebRequest and show you how to extract valuable information. Our primary focus will be on the PowerShell subreddit, where we’ll gather post titles and their associated links. Let’s embark on this journey step by step.

Setting Up Your Web Request

Before we delve into parsing, we need to establish a connection with the target website. In our case, it’s the PowerShell subreddit. We define the URL we want to work with and use Invoke-WebRequest to fetch its content. Here’s how you set it up:

$parseURL = 'http://www.reddit.com/r/powershell'

$webRequest = Invoke-WebRequest -Uri $parseURL

Now that we have the web content, let’s examine the $webRequest variable. It holds a wealth of information that we can explore to our advantage.

Inspecting the Web Content

- RawContent: This property gives us the content in its raw form, including header data. Useful when you need to analyze the complete response from the server;

- Forms: Discover any forms present on the web page. This will be crucial when dealing with interactive websites that require user input;

- Headers: This property contains just the returned header information. Useful for examining the metadata associated with the web page;

- Images: If there are images on the page, this property stores them. Valuable for scraping images from websites;

- InputFields: Identify any input fields found on the website. This is crucial for interacting with web forms;

- Links: Extract all the links found on the website. It’s provided in an easy-to-iterate format, making it handy for navigating through linked content;

- ParsedHTML: This property opens the door to the Document Object Model (DOM) of the web page. The DOM is a structured representation of the data on the website. Think of it as a virtual blueprint of the web page’s elements. Note that ParsedHTML is available in Windows PowerShell only; PowerShell 6 and later do not provide it.

To explore these properties further and uncover more hidden gems, you can use the Get-Member cmdlet. However, for the scope of this article, we’ll concentrate on the properties mentioned above.

Digging Deeper with ParsedHTML

Our main objective is to extract titles and links from the PowerShell subreddit. To accomplish this, we need to interact with the DOM, and the ParsedHTML property provides us with the means to do so. Let’s proceed step by step:

Identifying the Elements: To extract the titles and links, we need to identify the HTML elements that contain this information. Using browser developer tools (like Edge’s F12), we can inspect the web page and determine that the titles are enclosed in a <p> tag with the class “title.”

Accessing the DOM: We can use PowerShell to access the DOM and search for all instances of <p> tags with the “title” class. Here’s how you can do it:

$titles = $webRequest.ParsedHTML.getElementsByTagName('p') | Where-Object {$_.ClassName -eq 'title'}

This code retrieves all the elements that match our criteria and stores them in the $titles variable.

Extracting Text: To verify that we have captured the title information, we can extract the text from these elements:

$titles | Select-Object -ExpandProperty OuterText

This will display the titles of the posts on the PowerShell subreddit.

Extracting Titles from Web Content: A PowerShell Solution

Step 1: Retrieving Titles

One of the initial challenges is obtaining the titles while excluding any appended text, such as “(self.PowerShell).” Here’s how we can tackle this issue:

- We start by utilizing a web request object, $webRequest, to retrieve the web content;

- We use the getElementsByTagName(‘p’) method to target HTML elements of type ‘p’;

- To filter only the titles, we employ the Where-Object cmdlet, checking if the element’s class is ‘title’.

Now, let’s enhance this process further by performing the following steps:

- Initialize variables like $splitTitle, $splitCount, and $fixedTitle for better code organization;

- Split the title into an array using whitespace as the delimiter ($splitTitle);

- Determine the number of elements in the array ($splitCount);

- Nullify the last element in the array, effectively removing the unwanted text;

- Join the array back together and trim any extra whitespace to obtain the cleaned title.

This approach allows us to obtain titles without extraneous information, ensuring the accuracy and usefulness of the extracted data.
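The cleanup steps above can be sketched as a small function. Get-CleanTitle is a hypothetical name, and the sample title is made up; the variable names follow the ones mentioned in the steps.

```powershell
# Hypothetical helper: drops the last whitespace-separated token, e.g. "(self.PowerShell)"
function Get-CleanTitle {
    param([string]$Title)

    # Split the title into an array on whitespace
    $splitTitle = $Title.Trim() -split '\s+'

    # Count the elements and blank out the last one
    $splitCount = $splitTitle.Count
    $splitTitle[$splitCount - 1] = $null

    # Join the pieces back together and trim the trailing space left behind
    $fixedTitle = ($splitTitle -join ' ').Trim()
    $fixedTitle
}

Get-CleanTitle -Title 'Need help with a script (self.PowerShell)'
```

This assumes every title ends with an appended token; a title without one would lose its last word, so a check for the "(self." prefix could be added if that matters.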

Step 2: Matching Titles with Links

With the titles successfully retrieved, our next objective is to associate each title with its corresponding link. Here’s how we can achieve this:

- Upon inspecting the web content, we discover that the outerText property of the links matches our titles stored in $titles;

- We create a custom object that includes an index, title, and link for each matched pair.

To facilitate this process, we need to perform some string manipulation on the links to extract the URL and ensure it’s properly formatted. Follow these steps:

- Initialize an index variable $i to ensure proper iteration;

- Create a .NET ArrayList to store our custom objects, named $prettyLinks;

- Iterate through each title in the $titles array;

- For each title, search through the Links property of $webRequest to find matching titles;

- Perform the necessary string manipulations to extract and format the link URL;

- Create a custom object containing the index, title, and link;

- Add the custom object to the $prettyLinks array;

This approach results in a well-structured array of custom objects that pair titles with their corresponding links, providing a cohesive and organized dataset.
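As a minimal sketch of these steps, the snippet below builds $prettyLinks from made-up titles and links. The sample data, the $baseUrl value, and the rule of prefixing relative links with the site address are all assumptions for illustration; the exact string manipulation needed depends on what the site returns.

```powershell
# Hypothetical stand-ins for the cleaned titles and for $webRequest.Links
$titles = @('First post', 'Second post')
$links = @(
    [pscustomobject]@{ outerText = 'First post';  href = '/r/PowerShell/comments/abc123/first_post/' }
    [pscustomobject]@{ outerText = 'comments';    href = '/r/PowerShell/comments/abc123/first_post/' }
    [pscustomobject]@{ outerText = 'Second post'; href = 'https://example.com/article' }
)
$baseUrl = 'http://www.reddit.com'

$i = 0
$prettyLinks = New-Object System.Collections.ArrayList

foreach ($title in $titles) {
    # Find the first link whose text matches the title
    $found = $links | Where-Object { $_.outerText -eq $title } | Select-Object -First 1
    if ($null -eq $found) { continue }

    # Assumed formatting rule: turn site-relative links into full URLs
    $link = $found.href
    if ($link -notmatch '^https?://') { $link = $baseUrl + $link }

    # Pair index, title, and link in one custom object
    $entry = [pscustomobject]@{ Index = $i; Title = $title; Link = $link }
    [void]$prettyLinks.Add($entry)
    $i++
}

$prettyLinks | Format-Table -AutoSize
```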

Now, let’s take a closer look at the code and the contents of the $prettyLinks variable to observe the successful execution of our solution.

Insightful Exploration of Object and Data Utility

The $prettyLinks array now holds one custom object per post, each carrying an index, a title, and a link. Because these are ordinary PowerShell objects, they can be filtered, sorted, exported, or passed to other commands like any other collection, which opens up plenty of ways to put the data to use.

A Glimpse into Practical Implementation

For those who are curious about putting $prettyLinks to work, below is an illustrative example that turns it into a simple title browser:

$browsing = $true

While ($browsing) {

$selection = $null

Write-Host "Choose a [#] from the list of titles below!`n" -ForegroundColor Black -BackgroundColor Green

ForEach ($element in $prettyLinks) {

Write-Host "[$($element.Index)] $($element.Title)`n"

}

Try {

[int]$selection = Read-Host 'Indicate your choice with [#]. To exit, press "q"'

if ($selection -lt $prettyLinks.Count) {

Start-Process $prettyLinks[$selection].Link

} else {

$browsing = $false

Write-Host 'Invalid option or "q" selected, browsing terminated!' -ForegroundColor Red -BackgroundColor DarkBlue

}

}

Catch {

$browsing = $false

Write-Host 'Invalid option or "q" selected, browsing terminated!' -ForegroundColor Red -BackgroundColor DarkBlue

}

}

This illustrative snippet is engineered to perpetuate a loop until the user opts to exit by entering “q” or selecting an invalid option. It meticulously enumerates all available titles, prompting the user to specify the desired one by its associated number. Upon receiving the input, it initiates the default web browser, navigating directly to the corresponding link of the selected title.
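One thing to watch in a loop like this: a negative number passes a plain -lt check against the count and would index from the end of the list. A stricter check could be factored into a small helper, sketched below. Resolve-Selection is a hypothetical name and not part of the original script.

```powershell
# Hypothetical helper: returns a valid zero-based index, or $null for anything else
function Resolve-Selection {
    param(
        [string]$InputText,
        [int]$Count
    )

    $index = 0
    # TryParse avoids an exception for non-numeric input such as "q"
    if ([int]::TryParse($InputText, [ref]$index) -and $index -ge 0 -and $index -lt $Count) {
        return $index
    }
    return $null
}

Resolve-Selection -InputText '1' -Count 3    # valid index
Resolve-Selection -InputText 'q' -Count 3    # nothing usable, returns $null
Resolve-Selection -InputText '-1' -Count 3   # rejected instead of wrapping around
```

Since 0 is a valid index, callers should compare the result against $null rather than test it for truthiness.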

Versatile Applications of the Data

This is just one of many ways to put the data to work. The interaction examples and the screenshot below show the script in action.

User Interaction Examples

Example 1:

- User Input: 17;

- Outcome: The default web browser opens the link that belongs to title 17.

Example 2:

- User Input: q;

- Outcome: The system recognizes the termination command, and the browsing session is concluded gracefully.

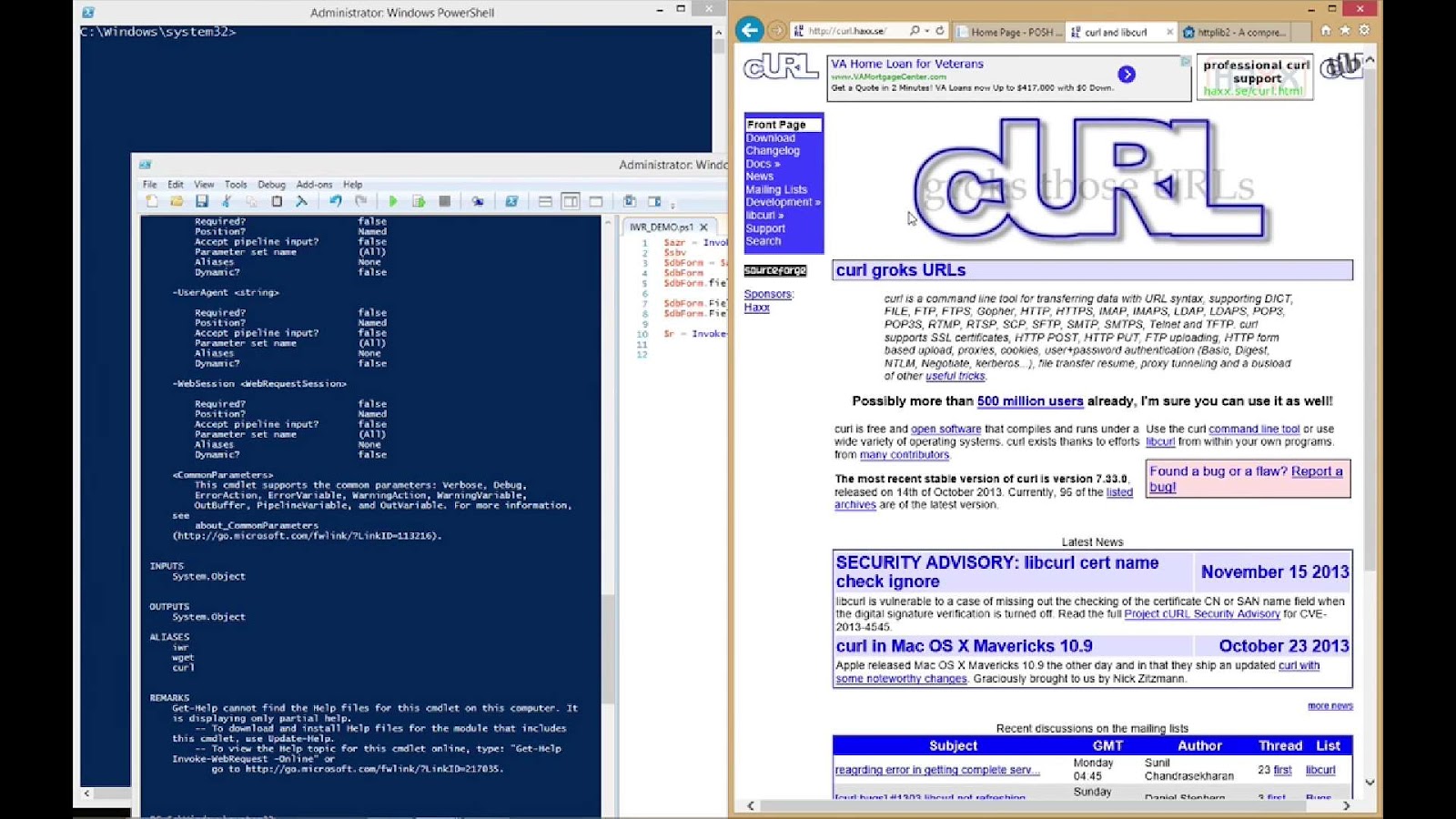

Understanding Form Handling with Invoke-WebRequest

Working with web forms using PowerShell’s Invoke-WebRequest offers a powerful way to interact with websites, automate tasks, and extract valuable information. In this guide, we’ll delve into the world of web forms and demonstrate how to harness their potential. We’ll use an example involving Reddit to illustrate each step comprehensively.

1. Retrieving Web Forms

When you visit a web page, there are often forms waiting to be filled out and submitted. Invoke-WebRequest allows us to interact with these forms programmatically. To begin, let’s explore how to obtain and manipulate these forms.

$webRequest = Invoke-WebRequest 'http://www.reddit.com'

$searchForm = $webRequest.Forms[0]

$webRequest stores the content of the Reddit homepage.

$webRequest.Forms contains an array of forms present on the page.

$searchForm now holds the specific form we're interested in.

2. Understanding Form Properties

Forms have essential properties that guide our interactions. Knowing these properties is key to effectively working with web forms:

- Method: This property dictates how the request should be sent. Common methods are GET and POST;

- Action: It specifies the URL where the request is sent. Sometimes it’s a full URL; other times, it’s a part we need to combine with the main URL;

- Fields: This is a hash table containing the data we want to submit in the request.
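When Action holds only part of a URL, it has to be combined with the page address before it can be used as a -Uri value. The following sketch shows one way to do that with the .NET Uri class; Resolve-FormAction is a hypothetical helper name, and the "/search" action is an assumed example value.

```powershell
# Hypothetical helper: resolves a form action against the page it came from
function Resolve-FormAction {
    param(
        [string]$PageUrl,
        [string]$Action
    )

    # Uri(baseUri, relativeUri) keeps an already absolute action as it is
    ([uri]::new([uri]$PageUrl, $Action)).AbsoluteUri
}

Resolve-FormAction -PageUrl 'http://www.reddit.com/' -Action '/search'
Resolve-FormAction -PageUrl 'http://www.reddit.com/' -Action 'https://www.reddit.com/search'
```

Running every Action through a helper like this means the same request code works for both relative and absolute actions.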

Let’s inspect these properties for our $searchForm:

$searchForm.Method

$searchForm.Action

$searchForm.Fields

3. Modifying Form Data

Once we’ve identified the form and its properties, we can manipulate its data. For instance, if we want to search Reddit for “PowerShell,” we can set the “q” field like this:

$searchForm.Fields.q = 'PowerShell'

It’s crucial to double-check our modifications to ensure they are as intended:

$searchForm.Fields

4. Sending the Request

Now, let’s format our request and initiate the Reddit search:

$searchReddit = Invoke-WebRequest -Uri $searchForm.Action -Method $searchForm.Method -Body $searchForm.Fields

Breaking down the request:

- Uri: We use $searchForm.Action to specify the full URL;

- Method: We utilize $searchForm.Method to ensure we use the correct HTTP method, as specified by the form;

- Body: We employ $searchForm.Fields to send the data as key-value pairs, in this case, “q” = “PowerShell.”
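For a GET request, a hash table passed to -Body ends up as query parameters on the URL. To make that visible, here is a rough sketch that builds such a query string by hand. ConvertTo-QueryString is a hypothetical helper, the "sort" field is an assumed extra value, and the exact encoding the cmdlet applies may differ in detail.

```powershell
# Hypothetical helper: turns a hash table of fields into a URL query string
function ConvertTo-QueryString {
    param([hashtable]$Fields)

    # Sort the keys so the output order is predictable
    $pairs = foreach ($key in ($Fields.Keys | Sort-Object)) {
        '{0}={1}' -f [uri]::EscapeDataString([string]$key), [uri]::EscapeDataString([string]$Fields[$key])
    }
    $pairs -join '&'
}

# "q" mirrors the search field from the article; "sort" is an assumed extra field
$fields = @{ q = 'PowerShell remoting'; sort = 'new' }
$query = ConvertTo-QueryString -Fields $fields

"http://www.reddit.com/search?$query"
```

Seeing the final URL this way can help when a form submission does not return what you expect.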

5. Validating and Parsing Results

With the search request completed, we can validate the data and even extract specific information from the results. For instance, to retrieve links from the search results:

$searchReddit.Links | Where-Object {$_.Class -eq 'search-title may-blank'} | Select-Object InnerText, Href

This code filters the links with the specified class and extracts their inner text and URLs.

6. Complete Example

Here’s the entire code example for searching Reddit with PowerShell:

$webRequest = Invoke-WebRequest 'http://www.reddit.com'

$searchForm = $webRequest.Forms[0]

$searchForm.Fields.q = 'PowerShell'

$searchReddit = Invoke-WebRequest -Uri $searchForm.Action -Method $searchForm.Method -Body $searchForm.Fields

$searchReddit.Links | Where-Object {$_.Class -eq 'search-title may-blank'} | Select-Object InnerText, Href

By following these steps, you can harness the power of Invoke-WebRequest to automate web interactions, gather data, and streamline your workflow. Experiment with different websites and forms to unlock endless possibilities for automation and information retrieval.

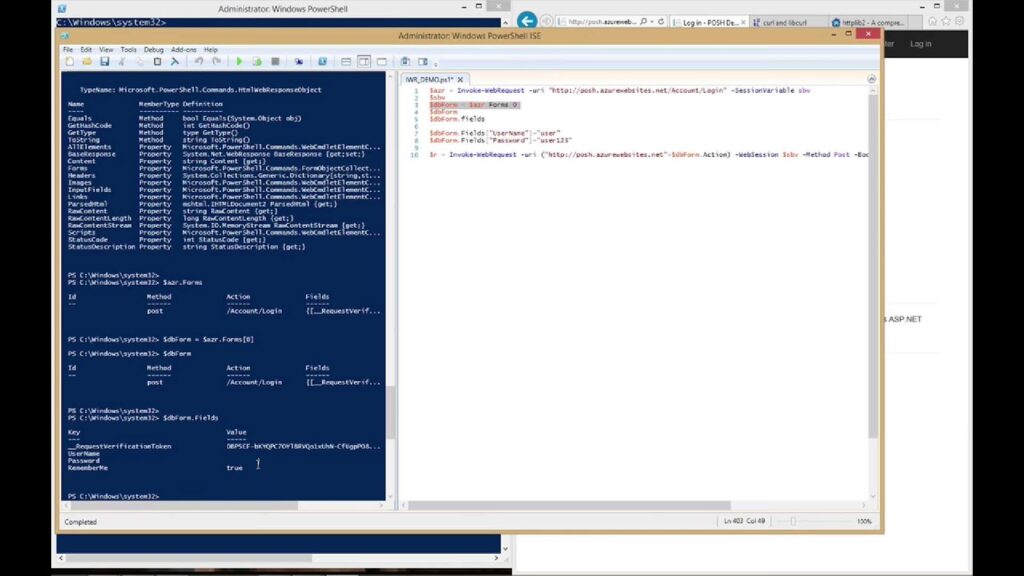

Accessing Websites through Scripted Login Procedures

Employing Invoke-WebRequest proves instrumental in scripting access to various websites, enabling automated interaction with web resources. This methodological approach entails a few essential steps, to ensure smooth and effective execution. Below is a comprehensive guide on these steps, designed to assist users in creating, refining, and utilizing scripted login procedures.

1. Employing an Appropriate User Agent

Typically, setting the user agent to Firefox is highly recommended. Even though this step isn’t mandatory for every website, it improves general compatibility and reduces the risk of being denied access because of an unrecognized or unsupported user agent. Firefox is widely accepted and recognized by a myriad of websites, ensuring a higher success rate during scripted interactions.

2. Initializing a Session Variable

The utilization of the sessionVariable parameter is pivotal, as it facilitates the creation of a variable responsible for maintaining the session and storing cookies. This ensures the persistence of the session throughout the interaction, allowing seamless navigation and transaction between different sections or pages of the website without the need to repeatedly login. Proper session management is crucial for automation scripts, especially when dealing with websites that have complex navigation structures and stringent session policies.

3. Form Population with Login Details

The correct form needs to be identified and populated with the necessary login details. The credentials can be securely stored in the $credential variable through the Get-Credential command. For instance, if one is to interact with Reddit, the credentials can be stored as follows:

$credential = Get-Credential

$uaString = [Microsoft.PowerShell.Commands.PSUserAgent]::FireFox

$webRequest = Invoke-WebRequest -Uri 'www.reddit.com' -SessionVariable webSession -UserAgent $uaString

Important Remark

While utilizing the -SessionVariable parameter, the “$” symbol should not be included in the variable name. This is crucial to avoid syntax errors and ensure the proper functioning of the script.
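To get a feel for what the session variable holds, the sketch below builds a session object by hand and stores a cookie in it. In a real script, -SessionVariable webSession would create $webSession for you; the cookie name, value, and domain here are made up for illustration.

```powershell
# With a live request, the name is passed without "$" and read back with it:
#   Invoke-WebRequest -Uri $uri -SessionVariable webSession
#   Invoke-WebRequest -Uri $nextUri -WebSession $webSession

# Offline stand-in: create the same kind of object manually
$webSession = [Microsoft.PowerShell.Commands.WebRequestSession]::new()

# Hypothetical cookie, as a site might set after a first request
$cookie = [System.Net.Cookie]::new('session_id', 'abc123', '/', 'www.example.com')
$webSession.Cookies.Add($cookie)

# Cookies stored for a given address are sent along on later requests to it
$stored = $webSession.Cookies.GetCookies([uri]'http://www.example.com/')
$stored.Count
$stored[0].Value
```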

4. Identification and Utilization of Correct Forms

The $webRequest.Forms command is used to access all forms on the website. Identifying the correct form is vital for successful login. For example, the ID of the needed form on Reddit is “login_login-main.” This knowledge enables the extraction of the specific form as shown below:

$loginForm = $webRequest.Forms | Where-Object {$_.Id -eq 'login_login-main'}

5. Verification of the LoginForm Fields

After acquiring the desired form, verifying $loginForm.Fields is essential to confirm its relevance and to discern the properties that need to be set. Thorough verification ensures that the scripts interact with the correct elements on the page, preventing errors and unintended consequences during execution. It also helps in understanding the structure of the form, facilitating the accurate population of the required fields with the appropriate values.

Setting Up and Logging In to a Website Using PowerShell

In this comprehensive guide, we’ll walk you through the process of setting up and logging in to a website using PowerShell. We’ll use practical code examples to illustrate each step. By the end of this tutorial, you’ll have a clear understanding of how to automate website logins using PowerShell.

Step 1: Storing Credentials

First, let’s store the login credentials in a secure manner. This ensures that sensitive information, such as the username and password, remains protected. To do this, we use the Get-Credential cmdlet.

$credential = Get-Credential

Tip: For added security, consider exporting your credentials to an XML file and importing them when needed.
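A minimal sketch of that export and import round trip, using Export-Clixml and Import-Clixml, might look like this. The user name, password, and file location are made up, and the credential is built in code only so the example runs without a prompt; in practice it would come from Get-Credential.

```powershell
# Hypothetical credential, created without prompting so the sketch can run unattended
$securePassword = ConvertTo-SecureString -String 'P@ssw0rd!' -AsPlainText -Force
$credential = [System.Management.Automation.PSCredential]::new('demoUser', $securePassword)

# Assumed location for the exported file
$credentialPath = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath 'demo-credential.xml'

# Save the credential, then load it back as you would in a later session
$credential | Export-Clixml -Path $credentialPath
$imported = Import-Clixml -Path $credentialPath

$imported.UserName

# Clean up the demo file
Remove-Item -Path $credentialPath
```

On Windows, the exported password is encrypted for the current user on the current machine, so the file cannot simply be copied to another account or computer. On other platforms the protection is weaker, so treat the file with care there.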

Step 2: Setting User Agent

To mimic a web browser, we’ll set the User Agent string to simulate a Firefox browser. This is done using the [Microsoft.PowerShell.Commands.PSUserAgent]::FireFox string.

$uaString = [Microsoft.PowerShell.Commands.PSUserAgent]::FireFox

Step 3: Initial Web Request

Now, let’s initiate a web request to the target website, in this case, ‘www.reddit.com’. We create a session variable, $webSession, to store cookies for the current session.

$webRequest = Invoke-WebRequest -Uri 'www.reddit.com' -SessionVariable webSession -UserAgent $uaString

Insight: This initial request sets up a session for maintaining state information, including cookies.

Step 4: Gathering Login Form Details

To log in, we need to locate and gather details about the login form on the website. We do this by inspecting the HTML and identifying the form’s unique identifier, in this case, ‘login_login-main’.

$loginForm = $webRequest.Forms | Where-Object {$_.Id -eq 'login_login-main'}

Step 5: Populating User and Password Fields

To populate the user and password fields of the login form, we assign the values from the $credential variable.

$loginForm.Fields.user = $credential.UserName

$loginForm.Fields.passwd = $credential.GetNetworkCredential().Password

Caution: Storing the password in the hash table as plain text is not recommended for security reasons. Consider more secure methods for handling passwords in production code.

Step 6: Attempting Login

Now, we’re ready to attempt the login using the gathered information and the web session we established.

$webRequest = Invoke-WebRequest -Uri $loginForm.Action -Method $loginForm.Method -Body $loginForm.Fields -WebSession $webSession -UserAgent $uaString

Step 7: Verifying Login

To verify if the login was successful, we check if the username appears in any of the web page’s links.

if ($webRequest.Links | Where-Object {$_ -like ('*' + $credential.UserName + '*')}) {

Write-Host "Login verified!"

} else {

Write-Host 'Login unsuccessful!' -ForegroundColor Red -BackgroundColor DarkBlue

}

Note: This verification method may vary depending on the website’s structure.

Step 8: Using the Authenticated Session

With a successful login, you now have an authenticated session stored in $webSession. You can use this session to browse or interact with the website further.

Conclusion

In conclusion, Invoke-WebRequest is a powerful cmdlet in PowerShell that plays a crucial role in enabling automation, data retrieval, and web interaction within the Windows environment. Throughout this article, we have explored the various capabilities and applications of Invoke-WebRequest, including its ability to send HTTP requests, retrieve web content, and interact with RESTful APIs. We’ve also delved into its essential parameters, such as headers, cookies, and authentication, which allow for fine-grained control over web interactions.

As we’ve seen, Invoke-WebRequest is a versatile tool that can be used for a wide range of tasks, from web scraping and data extraction to monitoring and automating web-based workflows. Its integration with PowerShell makes it an invaluable asset for system administrators, developers, and IT professionals seeking to streamline their processes and access web resources efficiently.

To harness the full potential of Invoke-WebRequest, it’s essential to continue exploring its capabilities and experimenting with real-world scenarios. With a solid understanding of this cmdlet, users can unlock new possibilities in their PowerShell scripts and automate web-related tasks with ease. As technology evolves, Invoke-WebRequest remains a reliable and essential component of the PowerShell toolkit, helping users navigate the ever-expanding web-driven landscape of modern computing.